I recently came across the article “25 proven tactics to accelerate AI adoption at your company” and loved it! It’s simple to follow and remember, and it has tons of examples.

It got me thinking: could we do a similar “guide” for L&D professionals trying to adopt AI in their teams? The purpose would be simple: cut through the noise of “what you should do” and just share what people are actually doing and what’s working for them.

I naturally turned to my network, because they’re awesome, very into AI, and always open to sharing their practices. So everything you see below is 100% thanks to them, their courage to experiment, and their openness to share.


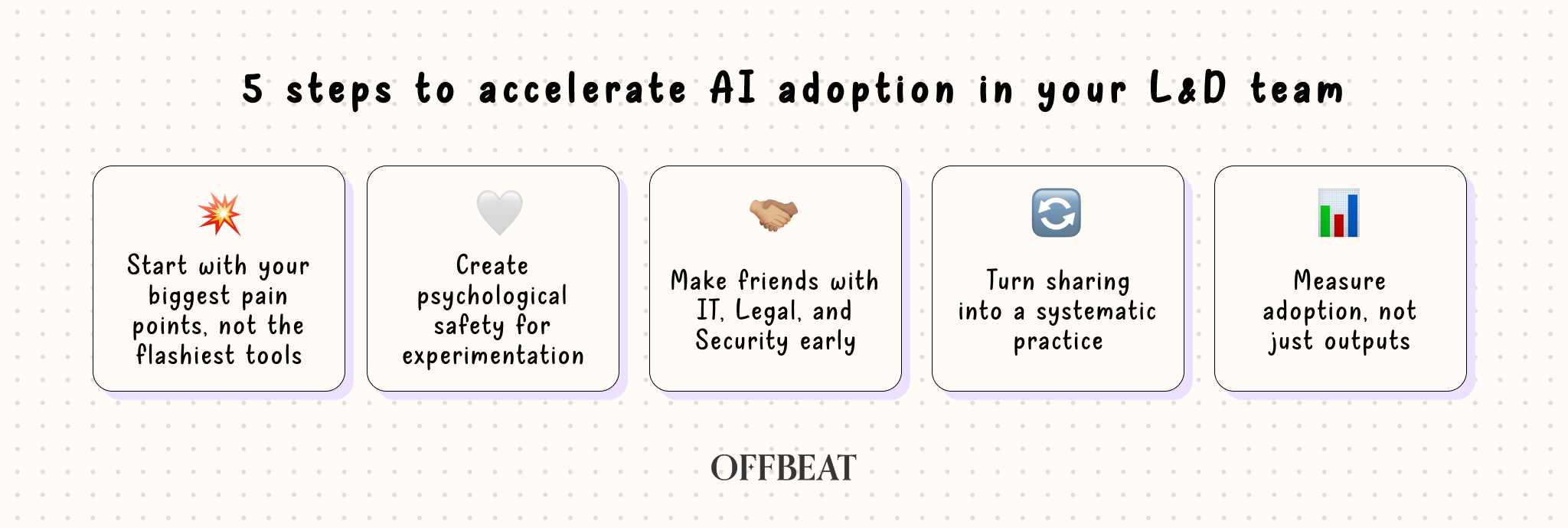

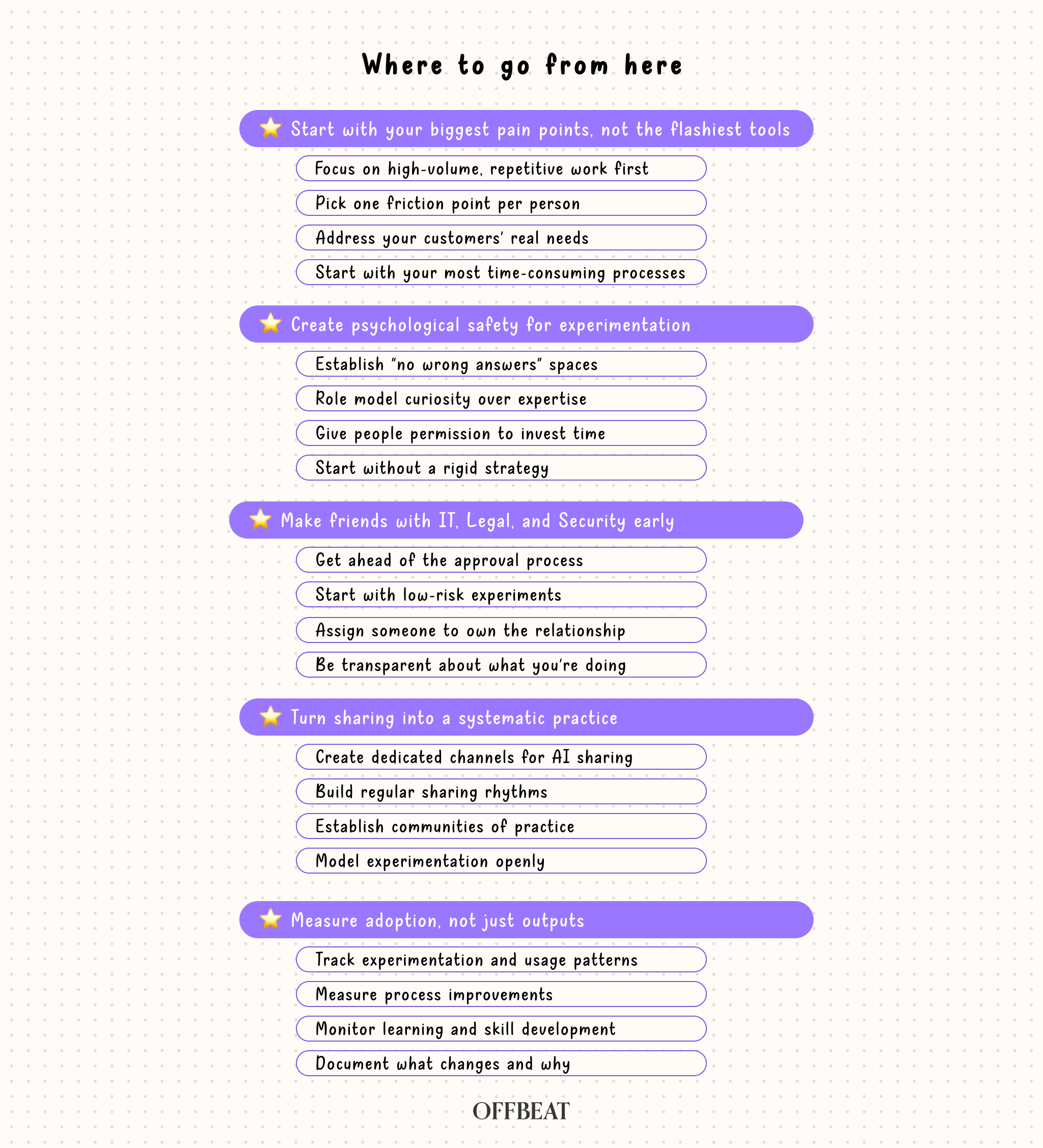

I asked L&D leaders from 9 companies the same questions, and here are the 5 red threads I found in their stories about AI adoption within their teams:

- Start with your biggest pain points, not the flashiest tools

- Create psychological safety for experimentation

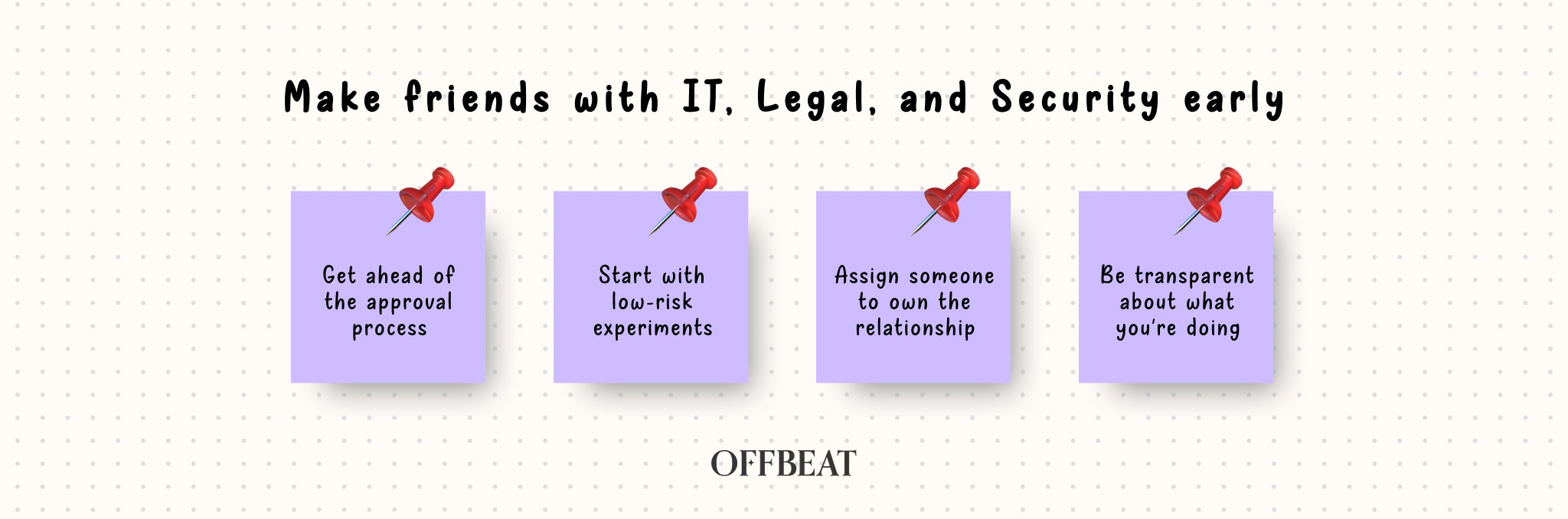

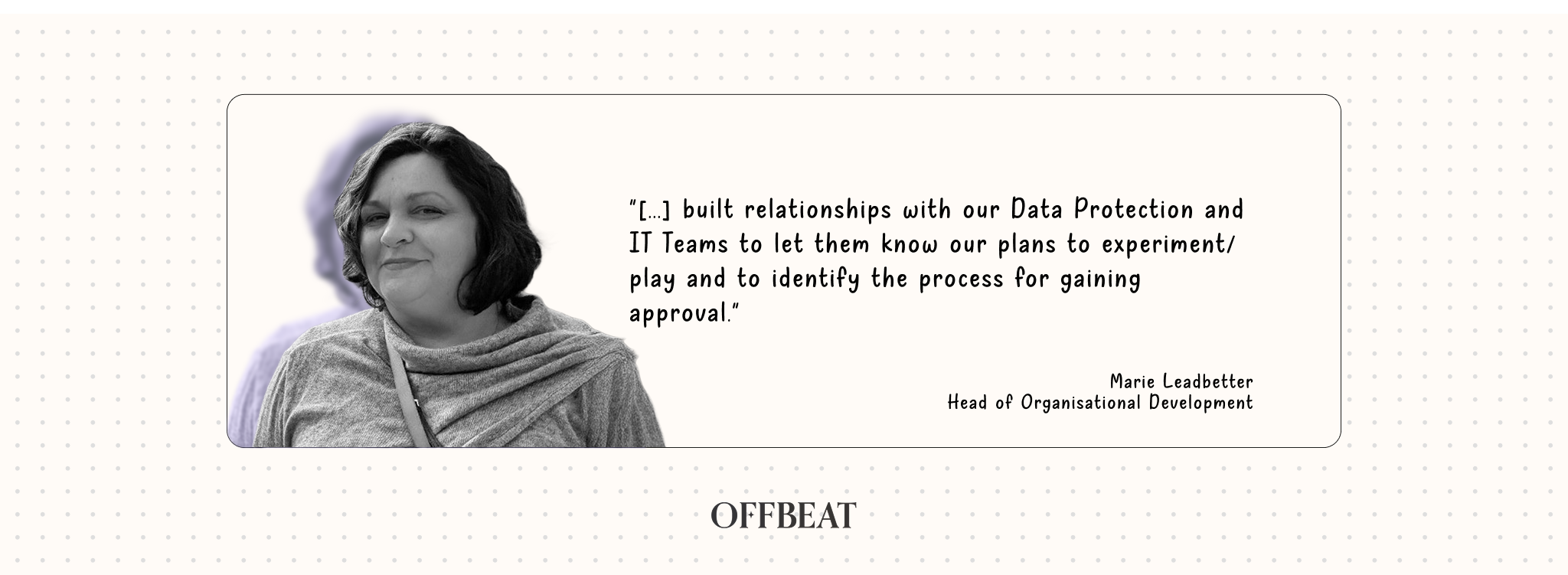

- Make friends with IT, Legal, and Security early

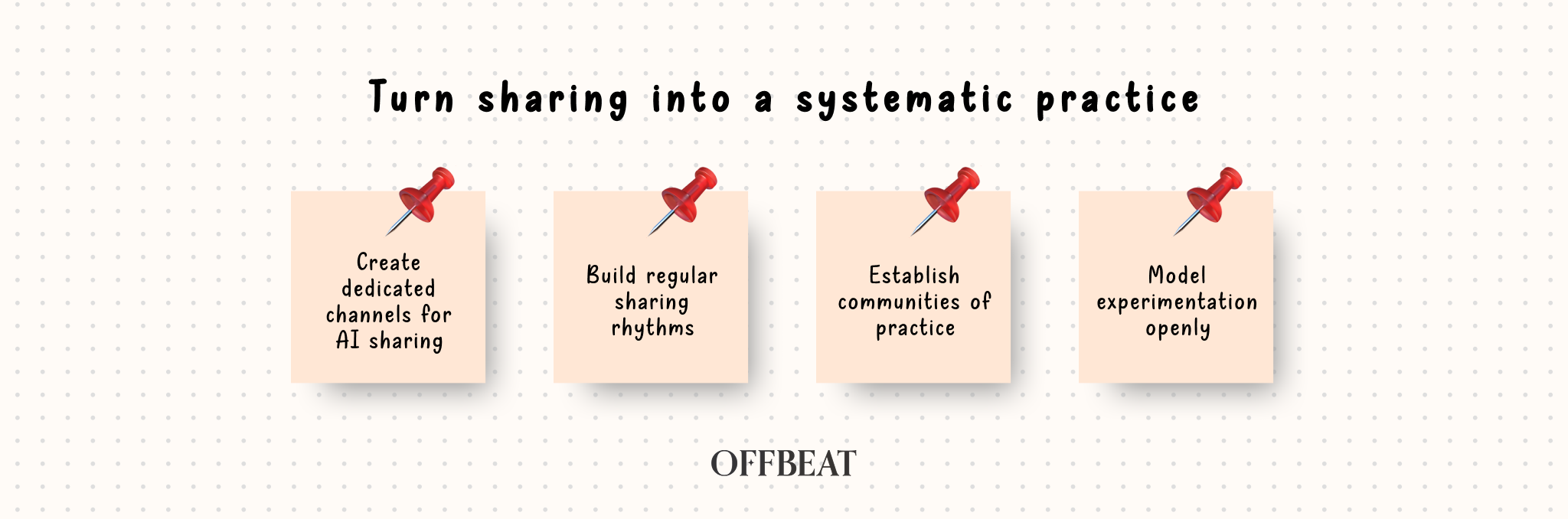

- Turn sharing into a systematic practice

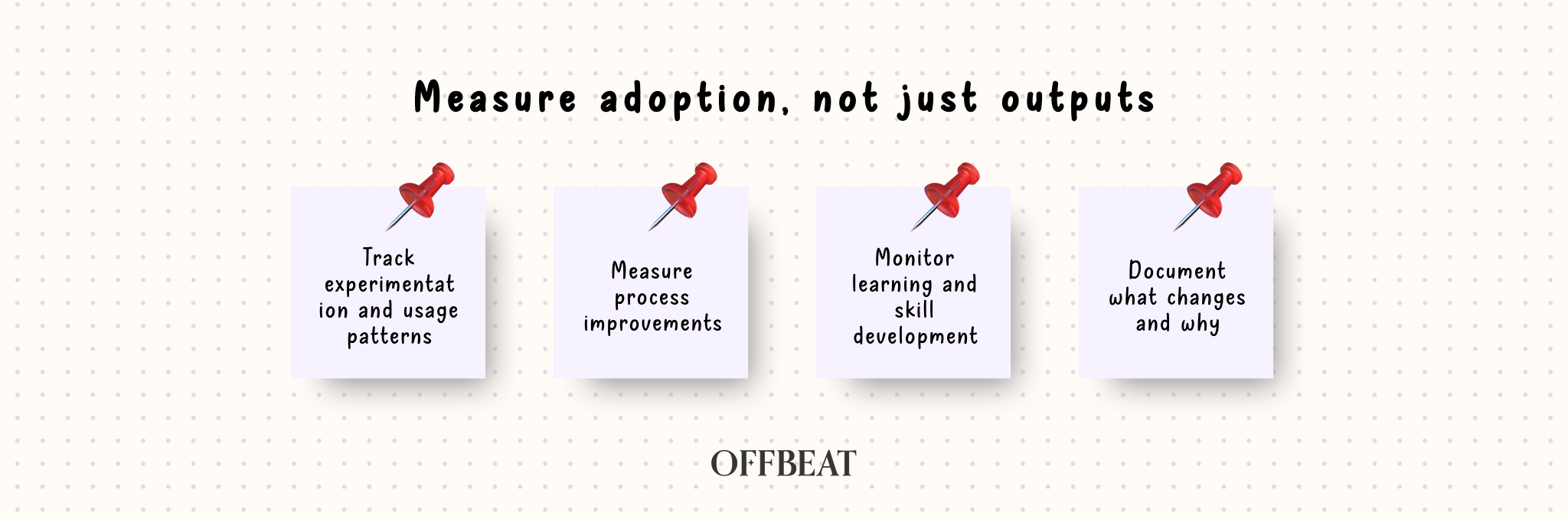

- Measure adoption, not just outputs

Let's break down exactly how these L&D teams made it work.

1. Start with your biggest pain points, not the flashiest tools

Rather than chasing the latest AI tools, several L&D teams found more success by identifying what was eating up the most time and energy in their workflows, then finding AI solutions that could address those specific friction points.

Here's what this looks like in practice:

Focus on high-volume, repetitive work first: Marie at Witherslack Group identified content creation as their "perfect storm" challenge: "not being able to increase our resource, large volume of learning requirements, increasing geographical spread of learners." They started with Synthesia for quick video creation, producing "something in about 20 minutes that looked polished and professional."

Pick one friction point per person: Christine at BDO USA asked each team member to "run a small, targeted experiment on a real deliverable. Pick one friction point, use AI to remove it, and evaluate effectiveness with a human review." This personalized approach meant everyone found something immediately useful.

Address your customers' real needs: Amanda at e-Core didn't just build content faster; she listened when leadership pipeline participants said "they love when we curate content, but they don't have time to read the materials, and podcasts would be a better fit." She used NotebookLM to turn existing materials into podcasts that matched how participants actually prefer to consume content.

Start with your most time-consuming processes: Chris at Booking.com focused first on "automation within our learning operations" because "we had poor data and lots of manual processes." Rather than jumping to content creation, they addressed foundational workflow issues that were slowing everything else down.

The key insight: successful AI adoption in L&D isn't about using the coolest tools. It's about solving real problems that are already slowing your team down or preventing you from serving your learners better.

2. Create psychological safety for experimentation

Several teams emphasized creating an environment where team members felt safe to experiment, fail, and share what they learned, without judgment or pressure to immediately show ROI.

Here's how they built this safety:

Establish "no wrong answers" spaces: Christine created "a dedicated, psychologically safe place for all interested team members to discuss their thoughts about AI and share their usage stories." Within clear guardrails, "there were no wrong answers," and the group was free to change course. In Christine's words, they "change[d] directions several times, and shared 'failures', which I still consider wins."

Role model curiosity over expertise: Faye at AELIA emphasized "constant sharing of practices and results as well of prompts, while we also comment on the prompts." She's "persistent in explaining the 'what' and the 'why'" and encourages others to share, even when they're not comfortable yet.

Give people permission to invest time: Emily's organization, CreateFuture, "recently released an AI experiment budget, encouraging people to find, adopt and share new AI tools," backed by "a robust and clear experiment policy" that "makes it less stressful for them to try new things."

Start without a rigid strategy: Eman at talabat describes their initial approach: "There was no strategic approach at the beginning, it was more about wanting to try different things and seeing what worked, or based on what we were working on we used to think of how can AI support us here." This organic exploration helped build confidence before adding more structure.
