In April I attended the Learning Technologies Conference in London, and I swear there wasn’t a single session without AI in the title. Anamaria Dorgo, my partner-in-crime for the event, said something that stuck with me: “AI won’t be a stand-alone thing. It’ll be embedded in everything we do in L&D.”

She’s right. And in many ways, we’re already wired for this. L&D folks are good at following the shiny, new trend. That might actually be to our advantage this time.

But I also believe that the future isn’t just about integrating AI into what we’re already doing. It’s about reinventing how L&D teams operate from the ground up.

Because, as Anamaria said, AI is not an add-on. It’s the infrastructure, the assistant, the lens, and the fuel. To truly make an impact, L&D teams will need to become AI-native, while also looking beyond AI, to unlock both performance and potential for organizations and individuals.

This guide is a deep dive into what we imagine AI-native L&D teams might look like in practice: how they make decisions, what roles they evolve into, how they build with AI, and the mindset shifts that help them do it.

It’s not a futuristic fantasy. It’s a shift already underway in the most forward-thinking organizations.

But the truth is, most of us aren’t there yet. And that’s okay. This isn’t about getting it perfect. It’s about getting started.

Why L&D Needs a New Operating Model

I don’t think we can outrun AI. As controversial as this technology is, it’s here to stay, and we might as well get on board in a smart way.

Dr. Philippa Hardman mentioned in her talk at LTC that L&D teams started using AI massively just last year, largely because many companies finally allowed controlled access. The most common use case? More efficiency and productivity in the design process.

At first, that felt like a red flag to me. I couldn’t shake the feeling that we might be scaling something that’s not really working. And to be honest, I still think that’s a risk. But Myles Runham offered a good counterpoint. AI arrived in a tough economic moment, when most organizations were under pressure to cut costs. Efficiency became the low-hanging fruit.

So yes, it’s understandable that we’re starting with “faster and cheaper.” But if we stop there, we’re missing the point. Because AI isn’t just about helping us do the same things faster. It’s already changing the very assumptions behind how learning and learning design happen.

Think about how we’ve approached online learning for years: static content, end-of-module quizzes, completion rates. It’s a model that prioritizes recall over real-world performance. What agentic AI makes possible is something much more powerful: practice in the flow of work, feedback in real time, learning that’s embedded in doing.

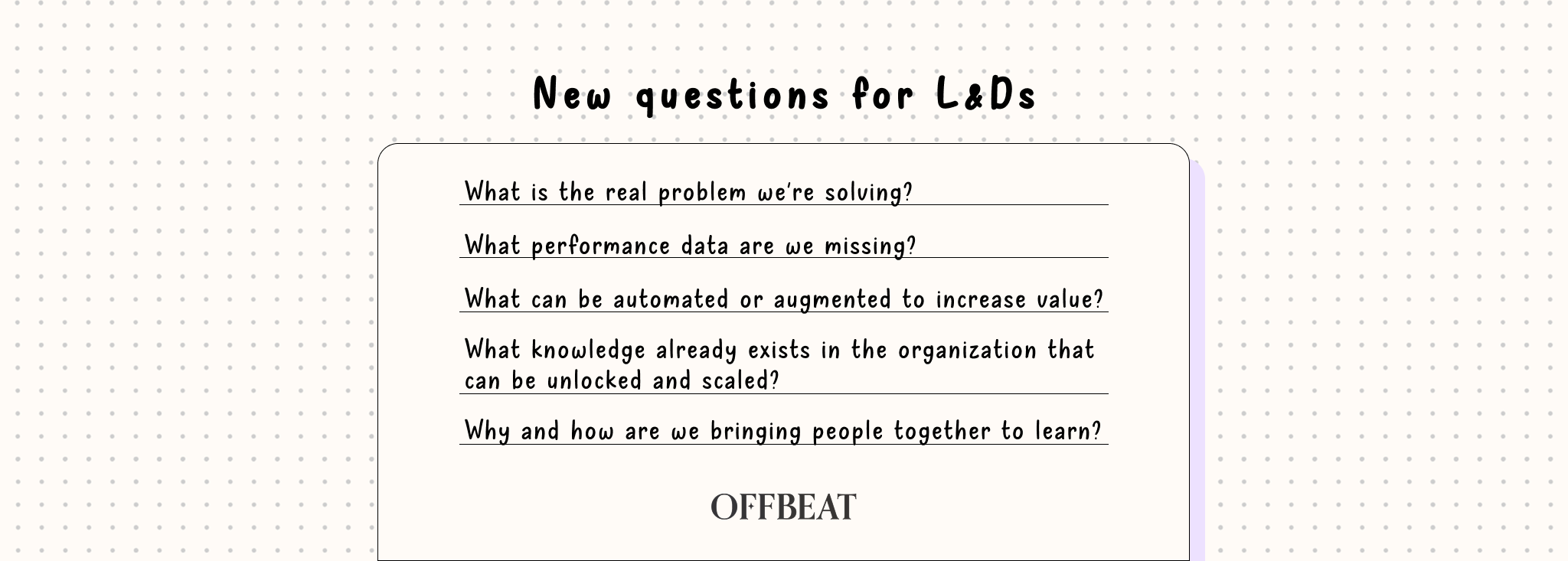

In this context, the core questions for L&D are changing. We're no longer asking, “What training should we build?” Instead, we’re asking:

- What is the real problem we're solving?

- What performance data are we missing?

- What can be automated or augmented to increase value?

- What knowledge already exists in the organization that can be unlocked and scaled?

- Why and how are we bringing people together to learn?

AI-native L&D teams aren’t just adding AI to existing processes. They’re redesigning those processes for a world of continuous, adaptive, and embedded learning.

This requires:

- Moving from courses to systems

- Moving from delivery to enablement

- Moving from evaluation after the fact to real-time intelligence

- Moving from training to experience design

The implication is profound: L&D must become embedded in the flow of work, embedded in decisions, and embedded in performance management. Learning is no longer a break from work; it is work.

And as AI takes over many predictable, repeatable aspects of learning design and delivery, the value of human connection increases. In this context, L&D teams must also reimagine how they help people connect with each other, with ideas, and with purpose. Experience design becomes a strategic lever: it's about crafting moments that invite reflection, spark conversation, foster psychological safety, and build communities of practice. When learning is everywhere, connection becomes the glue that makes it meaningful.

New Thinking: What Sets AI-Native L&D Apart

Okay, so what exactly are the principles that set AI-native L&D teams apart? I’d argue that most of them aren’t new. We’ve been talking about them for years. But now, some are becoming impossible to ignore, while others are showing up for the first time.

In traditional L&D, content is king. The measure of success is often completion rates or course feedback. But in an AI-native world, that mindset no longer holds. AI-native teams approach learning differently, grounded in four core pillars:

1. Performance-first orientation. AI-native teams start with business problems and performance gaps. They don't begin with learning objectives; they begin with outcomes. They reverse-engineer from real-world success metrics: sales improvement, process adherence, faster onboarding, and customer satisfaction.

2. Systemic thinking. They understand that learning is part of a broader system that includes culture, incentives, feedback loops, and workflows. AI-native L&D teams think like system designers, constantly tuning inputs and outputs to improve how people learn and perform.

3. Data as design input. AI-native teams don’t wait for post-training surveys to evaluate effectiveness. They continuously feed data into design decisions: behavioral signals, usage stats, feedback simulations, and more. This enables a design process that is iterative, evidence-based, and grounded in what works, not what’s assumed.

4. Design for human connection. Critically, these teams don't just design content; they design for human connection. Whether through peer feedback rituals, shared reflection prompts, or live collaboration spaces, they use experience design to create the conditions where people learn not just from content, but from each other.

This mindset shift is what distinguishes a team that uses AI from one that builds with AI.

What’s Holding L&D Teams Back

Still, most of us aren’t there yet.

We’ve spoken with dozens of L&D leaders and practitioners over the past few months. Many are curious about AI. Some are experimenting. But very few feel like they’re truly ahead.

Here’s what we’ve heard is getting in the way:

1. Lack of clarity on purpose. Many L&D teams still operate reactively. They respond to training requests rather than leading performance conversations. Without a clear north star, it’s hard to decide how or why to adopt AI.

2. Limited access to tools (or too many tools). Some teams don’t have access to basic AI tools. Others are overwhelmed by choice and don’t know where to start. The result? Either paralysis, or scattered, disconnected experiments.

3. A perception problem. In some organizations, L&D is still seen as an admin function, not a strategic partner. This makes it harder to get buy-in, resources, or even a seat at the AI table.

4. Fear of “doing it wrong”. Let’s be honest: AI can be intimidating. From hallucinations to bias to ethical concerns, it’s easy to feel like you need everything figured out before taking action. But perfectionism holds us back more than it protects us.

5. Forgetting the human. Ironically, in our rush to explore AI, some teams lose sight of what learning is really about: connection, growth, and meaning. If AI doesn’t help us do those better, then what’s the point?

This isn’t about shaming anyone. We’re all figuring it out. But if we want to move forward, we have to be honest about what’s holding us in place.

Ways of Working: Building With, Not Just Using, AI

But while some teams feel paralyzed, others are leaning in, rebuilding how they work from the ground up. What are they doing differently?

1. Front-loaded simulation and prototyping. Before writing content or launching a pilot, teams simulate learner interviews using GPT-4o, predict engagement levels with different formats, generate learner personas, and model skill transfer likelihood.

2. Lean experimentation mindset. Borrowing from agile, these teams launch MVPs of learning products, run small pilots, gather rapid feedback, and iterate weekly. AI tools are used to analyze open-text feedback, simulate reactions, and compare performance data across cohorts.

3. Content creation as a system, not a one-off. Instead of building content from scratch every time, AI-native teams build reusable prompt libraries, generate adaptable templates, and create modular micro-content that is recombined and customized by AI.

4. Multi-tool orchestration. AI-native teams use a toolbox, not a monolith. Tools are chosen for specific stages:

- Perplexity for sourcing evidence-based insights

- Claude for structured writing and standards compliance

- Synthesia for video creation

- Sana, HowNow, or Copilot for automated skills management

- Epiphany for learning design that’s rooted in science

- Pika, Imagen, and ElevenLabs for media generation

- DeepL for smart translation

- Whimsical/Napkin for diagrams

A well-orchestrated stack enables speed, consistency, and quality, without locking into a single vendor ecosystem.

5. Gaining time for higher-order thinking. Instead of spending time on manual and often tedious tasks, AI-native teams discuss performance issues, review insights to spot patterns across teams, regions, or roles, and shift their focus from content to connection in trainings and workshops.

This is where AI doesn’t just make us faster; it makes us better. Because when you take away the grunt work, you give people space to be more strategic, reflective, and human.
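If "reusable prompt libraries" (point 3 above) sounds abstract, here is one way it might look in practice. This is only a minimal sketch, not a description of any particular team's setup: the template names, wording, and the `build_prompt` helper are all hypothetical, and it uses nothing beyond Python's standard library. The idea is simply that prompts are stored once, with named blanks, and filled in per project instead of being rewritten from scratch.

```python
from string import Template

# Hypothetical prompt library. The template names and wording are
# illustrative assumptions, not taken from any specific tool or team.
PROMPT_LIBRARY = {
    "learner_persona": Template(
        "Create a learner persona for a $role in $region. "
        "Focus on the performance gap: $gap."
    ),
    "feedback_themes": Template(
        "List up to $max_themes recurring themes in this open-text "
        "feedback from $cohort:\n$feedback"
    ),
}


def build_prompt(name: str, **fields: str) -> str:
    """Fill a stored template with project-specific values.

    Raises KeyError if the template name is unknown or a blank is left unfilled.
    """
    return PROMPT_LIBRARY[name].substitute(**fields)


if __name__ == "__main__":
    prompt = build_prompt(
        "learner_persona",
        role="new sales manager",
        region="EMEA",
        gap="slow onboarding",
    )
    print(prompt)  # paste into whichever AI tool your team uses
```

Because a missing field raises an error rather than silently producing a half-filled prompt, a library like this also nudges the team to agree on what context every prompt needs before anyone hits "generate".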
